This article presents a theory for understanding intelligence. By studying what “intelligence” means to us, we define a trivial example implementation. Then, by studying its faults, we expand the theory with an understandable representation, apply the resulting theory to the human brain to verify it, and finally apply it to a hypothetical Artificial Intelligence program to see how one could be implemented.

Although the definition of intelligence has never been widely agreed upon and remains one of those mystical “it's just humans” or “we will know it when we see it” properties [9], we generally like to distinguish between two basic types of entities:

Intelligent entities

Automated or instinctual entities

Intelligence being the domain of human beings and automation the domain of machines, we like to think humans are the only examples of Intelligent entities on Earth [8], while machines and simpler lifeforms on the other hand are purely Automated entities. As one of their primary and relatively well defined properties, Intelligent entities are said to be capable of learning and understanding abstract concepts, whereas Automated entities are preprogrammed with responses to specific actions and do not truly understand anything, responding only according to this existing programming [10].

Given that all remaining issues depend on this one property (self, emotions, etc. are all abstract concepts), I will assume that learning to understand abstract concepts is the primary and only property that an Intelligent entity is required to possess to be intelligent.

“Abstract” is defined as [1]:

Thought of or stated without reference to a specific instance: abstract words like truth and justice.

...and “understanding” as [2]:

Mental process of a person who comprehends; comprehension; personal interpretation: My understanding of the word does not agree with yours.

...further “to comprehend” as [3]:

To take in the meaning, nature, or importance of; grasp.

Thus seen from an objective perspective and in simpler terms, “understanding” is the ability to extract and store information regarding a subject that is usable in an interactive scenario, and “abstract” is a set of properties that do not belong to a specific item. Our typical use of “abstract” is as “an abstraction of...” and usually relates to a group of items (as in “apples” but not necessarily “this apple”).

So the simplest possible intelligent entity is one capable of extracting and fully storing interactively usable information from the outside and associating sets of this information into abstractions also stored within it. Such a trivial system could be the chatterbox program Billy [4], which is capable of picking up words to be given in response at a later time and attempting to group them by perceived meaning. While this program will convince no one of its intelligence, it occasionally (upon careful inspection) displays apparent understanding of the meaning of the words, despite containing hardly any hardwired algorithms beyond basic analysis of sentence structure and no sensors to associate the words with what they may be referring to.

The limitations of the simple program presented above are obvious. Though the basic philosophy is correct, the framework of the program is simply too trivial. It only has two inputs. The first is accepting sentences and the other is a sense of time, measured by the lines as they are entered. The program's framework is designed to allow associating words with each other based on the time they are entered and nothing more. To allow creation of more sophisticated Intelligent entities, the principles of operation need to be understood and improved upon.

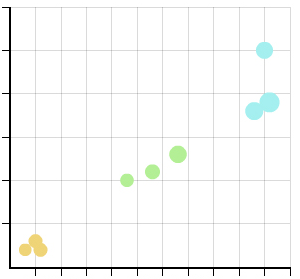

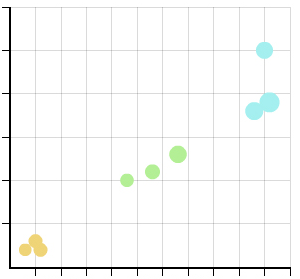

The basic principle of operation here is: group input values. With just two inputs, Billy's database could be properly presented as a 2-dimensional graph, where each possible unique word is presented as a value on the X axis, and time as perceived by the program on the Y axis. Each entry is thus a dot on this graph, and the abstractions of Billy's mind are obvious dot groups, since the Billy program groups the entries by proximity on the Y axis. Ideally, the program should be able to see similarities on the X axis as well, for example to recognize typos.
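The grouping described above can be sketched in a few lines of Python. This is not Billy's actual code, which is not reproduced in this article; the function names `record` and `group_by_time` and the `max_gap` parameter are hypothetical, and the line index stands in for the program's sense of time.

```python
from collections import defaultdict

def record(lines):
    """Turn entered lines into (word, time) dots; time is the line index."""
    dots = []
    for t, line in enumerate(lines):
        for word in line.lower().split():
            dots.append((word, t))
    return dots

def group_by_time(dots, max_gap=0):
    """Associate each word with the words whose time (Y) coordinate
    lies within max_gap of one of its own occurrences."""
    groups = defaultdict(set)
    for word, t in dots:
        for other, u in dots:
            if other != word and abs(t - u) <= max_gap:
                groups[word].add(other)
    return dict(groups)

lines = ["the apple is red", "red fruit tastes sweet", "my car is fast"]
dots = record(lines)
groups = group_by_time(dots)
print(sorted(groups["apple"]))  # words entered on the same line as "apple"
```

Because "red" appears on two lines, its group spans both, which is the kind of dot group the graph makes visible. Nothing here compares words along the X axis, so a typo would still be stored as an unrelated word.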

A graph is selected for representation because it is something that we can understand as a medium capable of storing all possible combinations of values item(x,y) without losing information. Such a medium is the platform for true understanding. For example, an improper medium for storing combinations of 3 independent inputs, like a 2-dimensional projection of a 3-dimensional graph, could not store item(x,y,z) as 3 fully independent inputs; if an entity were to use this medium to learn about a subject, a person interrogating it would quickly discover the lack of comprehension of the nature of the Z input and conclude that the entity does not understand*. By containing all the information, the entity allows itself to derive relevant information as it is needed.

* = What is missing here?
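The loss of information in an improper medium can be shown with a minimal Python sketch. The oblique projection used in `project_to_2d` is only one assumed way of flattening three inputs onto two axes; the function name and the sample points are illustrative.

```python
def project_to_2d(point):
    """Flatten item(x, y, z) onto a plane using a simple oblique projection.
    The Z input is folded into both remaining axes (an assumed projection)."""
    x, y, z = point
    return (x + z, y + z)

# Two different items that differ in all three inputs.
items = [(1, 2, 0), (0, 1, 1)]

full_store = set(items)                            # keeps x, y and z independent
flat_store = {project_to_2d(p) for p in items}     # 2-dimensional medium

print(len(full_store), len(flat_store))
```

Both items land on the same dot in the flattened store, so an entity relying on it could no longer tell them apart, whatever question about the Z input it is asked.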

To expand on this idea, we introduce more inputs and hence more dimensions to our graph. Because the inputs are simple analog values, a real-life system could have thousands or millions of them (say, the component color pixel values on a CCD optical sensor). Imagining a 1000-dimensional graph is no longer so straightforward, yet the same simple storing and grouping principles (proximity in meaning) still apply. The groups would still have to be defined in some way. This may be done with the simple approach of using points close to each other to define the boundaries of an abstraction. A more sophisticated approach may be to assign each dot an abstract dot and then “tug” the abstract dots together whenever they appear at the same time, in effect giving each combination of sensory input an abstract counterpart, which may slowly morph into a different meaning but still directly corresponds to it.
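The “tugging” approach could look roughly like the following Python sketch. The update rule (moving each abstract dot a fixed fraction of the way toward the other) and the `rate` parameter are assumptions made for illustration; the text above does not specify how strongly or in what manner the dots should be pulled.

```python
def tug(abstract, a, b, rate=0.25):
    """Pull the abstract dots of a and b toward each other by `rate`
    of their current separation (an assumed update rule)."""
    pa, pb = abstract[a], abstract[b]
    abstract[a] = tuple(x + rate * (y - x) for x, y in zip(pa, pb))
    abstract[b] = tuple(y + rate * (x - y) for x, y in zip(pa, pb))

def distance(p, q):
    """Euclidean distance between two dots of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(p, q)) ** 0.5

# Sensory dots stay fixed; each one starts with an identical abstract dot.
sensory = {"apple": (0.0, 0.0), "red": (10.0, 0.0), "car": (0.0, 10.0)}
abstract = dict(sensory)

before = distance(abstract["apple"], abstract["red"])
for _ in range(3):          # "apple" and "red" appear together three times
    tug(abstract, "apple", "red")
after = distance(abstract["apple"], abstract["red"])

print(before, after)
```

The sensory coordinates are never modified, so each abstract dot keeps a direct counterpart even as it drifts. The same code works unchanged for dots with any number of dimensions.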

Technically, if the theory above is correct, we should be able to explain an existing Intelligent entity's inner workings with it. A human brain is widely recognized as an Intelligent entity [8].

The human brain is composed of neurons, some of which reach outside of the brain to connect the senses to the neurons within the brain. These senses may be considered to be inputs. [5] The neurons form connections within the brain. According to the theory above, I would hypothesize that the physical connection pathways themselves represent the information stored, where each connected input terminal could be considered to be a coordinate from the graph mentioned earlier. Connections between separate pathways thus represent abstractions. The number of neurons involved determines the brain's ability to understand details. So while a brain is not a graph, it behaves in much the same way.

However, the brain is only 3-dimensional, and it has numerous other structures within it [5] that this theory does not seem to explain. It is reasonable to assume that these additional structures are what in fact allow the brain to work around this 3-dimensional barrier. The primary feature of what I call the “3-dimensional barrier” is that, because of it, there would be no way to store abstract concepts described by more than 3 independent inputs, since there would simply be no space for the pathways needed to create meaningful connections between them all. However, the brain's other structures, such as the neuron's star shape, glia-cell encased pathways, and the ability to use different neurotransmitters [5], seem like ideal tools to overcome this very problem. They allow neurons to reach beyond each other to create connections, or to create long-distance connections which are nearly equivalent to direct ones. Unfortunately, these workarounds are not perfect: star-shaped neurons can only reach a small distance, glia-cell encased pathways are not as dense as direct neuron contact, and alternate neurotransmitters spread slowly and leave lingering signals. All of these are also hardwired and genetically or developmentally dependent. This means that, because of the limitations of these additional structures, our ability to understand certain concepts depends on the way they are laid out, and this layout does not depend on the learning process.

Note that, according to this theory, all (animal) brains capable of changing their neuron layout and containing enough neurons to make indirect connections are Intelligent entities.

One of the questions most often raised in relation to the creation of artificial Intelligent entities is: Do our most sophisticated computers have enough capacity to be Intelligent entities? The usual approach to the goal was to attempt to fully simulate the human brain within a computer. There are obvious capacity problems with a proper simulation of a biological system: even global networks of computer clusters are barely able to simulate the folding of a single protein molecule, and far from real time [6]. The challenge of correctly simulating a single cell is unimaginably greater, and accurately simulating an organ such as a brain in real time is practically out of reach for the foreseeable future.

However, by understanding the mechanism that implements intelligence, we can simplify everything to just implementing intelligence, without all the physical and biological processes that make it happen in a human brain. And because we know what we are trying to achieve, we can also optimize the implementation for the specific platform. For example, on computers there is no issue working beyond the 3-dimensional barrier [7], hence no need for most of the complexity of the human brain.

While this understanding and these optimizations bring the Intelligent entity into the domain of current computers, there is still a lot of design to be done to ensure the computer can store the information in a way that does not limit its capacity to understand details (this concerns the precision of the graph coordinates). There is also design to be done to ensure the computer can think quickly enough (this concerns the efficiency of the point proximity search algorithm; radial searching would be required to find the most related concepts first). It is also important to note that, since this theory only works with inputs (which are synonymous with the outputs that result in them), the artificial Intelligent entity would require automated mechanisms that allow it to reproduce any input. While these have little to do with the challenge of implementing an Intelligent entity, they will allow it to interface with the world around it. There may also be additional hardwired specifics required for humans to understand the resulting Intelligent entity.
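As a rough illustration of the point proximity search, the following Python sketch returns the stored concepts around a query point, nearest first. The function name `radial_search`, the `radius` cutoff, and the sample coordinates are hypothetical. The sketch scans every stored point, which would be far too slow for the number of inputs discussed earlier; an efficient implementation would need some form of spatial index, which is not attempted here.

```python
import math

def radial_search(points, center, radius):
    """Return (distance, name) pairs for all points within `radius`
    of `center`, ordered so the most related concepts come first."""
    hits = []
    for name, coords in points.items():
        d = math.dist(center, coords)   # Euclidean distance (Python 3.8+)
        if d <= radius:
            hits.append((d, name))
    return sorted(hits)

# Hypothetical stored concepts with 2-dimensional coordinates.
points = {"apple": (1.0, 1.0), "pear": (2.0, 1.0), "car": (9.0, 9.0)}

nearest = [name for _, name in radial_search(points, (1.0, 1.0), 3.0)]
print(nearest)
```

Widening `radius` step by step gives the radial behavior described above: closely related concepts are found first, and more distant ones only if the search is allowed to continue.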

There has been a lot written on the topic of developing Artificial Intelligence, and even more said, both recently and some time ago. This article does not discuss the usual grim fictional scenarios, nor does it present a spectacular result. Instead, it presents a reasonable theory for understanding ourselves and gives an understanding for potentially creating an intelligence much like our own, at the core of which is not destruction or competition, but the reasonable and helpful ability to understand.

Understanding our ability to understand allows us to be better teachers. Understanding our limits allows us to acknowledge and grow to overcome them. Beyond that, the sky is the limit.

[1] abstract. (n.d.). The American Heritage® Dictionary of the English Language, Fourth Edition. Retrieved December 06, 2008, from Dictionary.com website: http://dictionary.reference.com/browse/abstract

[2] understanding. (n.d.). The American Heritage® Dictionary of the English Language, Fourth Edition. Retrieved December 06, 2008, from Dictionary.com website: http://dictionary.reference.com/browse/understanding

[3] comprehend. (n.d.). The American Heritage® Dictionary of the English Language, Fourth Edition. Retrieved December 06, 2008, from Dictionary.com website: http://dictionary.reference.com/browse/comprehend

[4] Billy program: http://pritisni.ctrl-alt-del.si/dl/billy.zip

[5] Kandel, E.R.; Schwartz, J.H.; Jessell, T.M. (2000). Principles of Neural Science. McGraw-Hill Professional. ISBN 9780838577011.

[6] Rosetta global protein folding simulation cluster: http://boinc.bakerlab.org/rosetta/

[7] MathWorld, Dimension: http://mathworld.wolfram.com/Dimension.html

[8] Perloff, R.; Sternberg, R.J.; Urbina, S. (1996). "Intelligence: knowns and unknowns". American Psychologist 51.

[9] McCarthy, J. What is Artificial Intelligence?

[10] Discussion on USENET group comp.ai.philosophy: http://groups.google.com/group/comp.ai.philosophy/topics?lnk